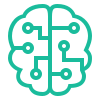

I was contacted by a company that is building a camera sensor for mobile phones that captures depth information and RGB through a single lens. They wanted to reconstruct objects automatically from different photos (as few as possible) using deep learning.

An initial prototype was built in Python, but the results weren’t very satisfying. I’m not sure whether that was due to a lack of accuracy in the sensor or because more photos were needed, but the deep learning pipeline was able to reconstruct some 3D scenes from the RGB-D images. Afterwards, they migrated it to C++ and improved the accuracy of the sensor.
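
To give an idea of the geometry involved, here is a minimal sketch of how a depth image can be turned into 3D points with a pinhole camera model. This is not the project's code: the function name `backproject_depth` and the intrinsics `fx`, `fy`, `cx`, `cy` are assumptions made for illustration, and the real pipeline relied on Open3D and a learned model rather than this plain-Python loop.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def backproject_depth(
    depth: Sequence[Sequence[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point]:
    """Back-project a depth map into camera-space 3D points.

    depth[v][u] is the depth (e.g. in meters) at pixel column u, row v.
    fx, fy are focal lengths in pixels and cx, cy the principal point
    (hypothetical values here; a real sensor provides calibrated ones).
    Pixels with non-positive depth are treated as invalid and skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no depth measurement at this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny 2x2 depth map with two valid pixels and made-up intrinsics.
example_points = backproject_depth(
    [[0.0, 2.0], [1.0, 0.0]], fx=1.0, fy=1.0, cx=0.0, cy=0.0
)
```

Point clouds obtained this way from several photos still need to be aligned into a common frame before an object can be reconstructed, which is where most of the difficulty lies.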

Technology stack: Open3D, Python