First of all, once again, let me apologise for my title; I am starting to think it is a bad habit acquired after too many years working in media companies. Secondly, I wasn’t aware of how easy it is to fall into biased deep learning until I saw it with my own eyes. And thirdly, this is not some kind of racist AI; it is just malfunctioning software that should be corrected.

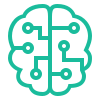

So please, let’s stop attributing human traits to software and move on to correcting it. I built a deep learning pipeline for an automotive startup in Barcelona, a company that merges computer vision and cognitive perception to raise awareness and promote good driving practices. The deep learning model works smoothly on aligned images of the driver; in fact, accuracy rises above 95% on the test set. But, as I was never happy with such good results, I constantly searched for overfitting. It never showed up… While searching for images that could trick my model, with poses and actors that weren’t in the training set (beards, sunglasses, extreme poses, bad lighting, etc.), I found a dataset of disguised people that, luckily, included some black people. Sadly, very sadly, the results surprised me.

The disturbing implications of algorithmic bias had happened to me. When I tested inference on people of other races, the problem did not appear, so it is not simply a problem that can be fixed with more training samples or fewer white racist engineers, as this article’s title claims (such misinforming articles hurt twice when they come from The Guardian). Today, it is a problem of awareness, because the bias is unintentional. I insist: it is not just a problem of training datasets, since some samples the network had never seen were predicted very well. I had read dozens of news articles about biased algorithms but never paid attention to them until it happened in my own work. While writing my thesis, I found some disturbing research papers, such as “Automated Inference on Criminality using Face Images”. The first thought that came to my mind was what would happen with this software in the United States, where, according to the Bureau of Justice Statistics, one in three black men can expect to go to prison in their lifetime. I hope they use my biased neural network 🙁; unfortunately, that will not happen, and in this case AI will just increase their odds.
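One low-tech way to catch this kind of failure earlier is to report test accuracy per subgroup instead of as a single number, because a headline figure like 95% can hide a group on which the model fails badly. The sketch below is a minimal illustration, assuming you already have a ground-truth label, a prediction, and a subgroup annotation for every test image; the function names `accuracy_by_group` and `groups_below_best`, the tolerance value, and the toy data are all hypothetical and are not part of the pipeline described in this post.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy}, computed separately for each subgroup label.

    Hypothetical helper: assumes the three sequences are aligned, one entry per test image.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


def groups_below_best(per_group, tolerance=0.05):
    """Return the groups whose accuracy is more than `tolerance` below the best group.

    The 0.05 default is an arbitrary illustrative threshold, not a recommended value.
    """
    best = max(per_group.values())
    return sorted(g for g, acc in per_group.items() if best - acc > tolerance)


if __name__ == "__main__":
    # Toy, made-up data: group "A" is predicted perfectly, group "B" is not.
    y_true = [1, 0, 1, 1, 0, 1]
    y_pred = [1, 0, 1, 0, 1, 1]
    groups = ["A", "A", "A", "B", "B", "B"]

    per_group = accuracy_by_group(y_true, y_pred, groups)
    print(per_group)
    print(groups_below_best(per_group))
```

On the toy data the overall accuracy is 4/6, which looks mediocre but unremarkable; only the per-group breakdown shows that every error falls on group "B". The subgroup annotation is the hard part in practice, since it has to be collected deliberately for the test set.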

Thinking about this research paper and about my malfunctioning software, I reached a final conclusion: since software architecture already has principles like “security by design”, why not start adopting others like “ethical by design”?